Maintenance

1. Update

To keep a Debian/Ubuntu system up to date, we usually do something like this:

apt update

apt upgrade

apt full-upgrade

apt dist-upgrade

What about Docker containers?

Usually it is not convenient to do that kind of update/upgrade inside a docker container. What we do instead is:

- pull an updated version of the base docker image

- rebuild the local image (if needed)

- rebuild the container

- etc.

We can do this kind of update/upgrade (build a new container and throw away the old one) because the data and configuration of the application are usually stored outside the container, so the newly built container can reuse them. In some cases, we can also modify the configuration of the new container automatically (with the help of scripts).

Most docker-scripts applications can be updated with just a ds make, which rebuilds the container, automatically customizes its configuration (if needed), and reuses the same data and/or configs (which are stored in the directory of the container).

Some docker-scripts applications may be a bit different, or may require additional steps. For example, for some of them we may need to use ds remake (instead of ds make), which usually makes a backup of the application first, then rebuilds it (with ds make), and finally restores the latest backup.
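The backup/rebuild/restore sequence described above can be sketched by hand as follows. This is only an illustration under assumptions: the function name remake_by_hand is hypothetical, the real ds remake may do more (or do it differently), and backup files are assumed to be named backup*.tgz so that the last one in sorted order is the newest.

```shell
#!/bin/bash
# Hypothetical helper (not part of docker-scripts): run the three steps
# that 'ds remake' is described as doing, inside one app directory.
# The body is a subshell, so the caller's working directory is unchanged.
remake_by_hand() (
    app_dir=$1
    cd "$app_dir" || exit 1

    ds backup || exit 1          # 1. make a backup of the application
    ds make   || exit 1          # 2. rebuild the container

    # 3. restore the newest backup (assumes names sort chronologically)
    latest=$(ls backup*.tgz 2>/dev/null | tail -n 1)
    if [[ -z $latest ]]; then
        echo "no backup*.tgz found in $app_dir" >&2
        exit 1
    fi
    ds restore "$latest"
)

# Usage (path taken from the examples in this section):
#   remake_by_hand /var/ds/ldap.user1.fs.al
```

Running the steps in a subshell keeps a failure in one app from leaving the calling shell in an unexpected directory.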

-

One of the steps of update is to update the code of the docker-scripts. It can be automated with a script like this:

cat << 'EOF' > /opt/docker-scripts/git.sh

#!/bin/bash

options=${@:-status --short}

for repo in $(ls -d */)

do

echo

echo "===> $repo"

cd $repo

git $options

cd -

done

EOF

nano /opt/docker-scripts/git.sh

cd /opt/docker-scripts/

nano git.sh

chmod +x git.sh

./git.sh

./git.sh pull

Update (reinstall) docker-scripts:

cd ds/

make install

-

Another step is to update the base Docker image (debian:13):

docker image ls

docker pull debian:13

docker pull debian:trixie

After updating each container, we can clean up old images like this:

docker image prune --force

docker system prune --force

-

This script lists all the update steps:

/var/ds/_scripts/update.sh

#!/bin/bash -x

# update the system

apt update

apt upgrade --yes

#reboot

# get the latest version of scripts

cd /opt/docker-scripts/

./git.sh pull

# update ds

cd /opt/docker-scripts/ds/

make install

# get the latest version of the debian image

docker pull debian:13

docker pull debian:trixie

# run 'ds make' on these apps

app_list="

sniproxy

revproxy

nsd

wg1

mariadb

postgresql

smtp.user1.fs.al

wordpress1

"

for app in $app_list ; do

cd /var/ds/$app/

ds make

done

docker image prune --force

# nextcloud

cd /var/ds/cloud.user1.fs.al/

ds update

# wordpress

cd /var/ds/wordpress1/

ds site update site1.user1.fs.al

ds site update site2.user1.fs.al

# openldap

cd /var/ds/ldap.user1.fs.al/

ds backup

ds make

ds restore $(ls backup*.tgz | tail -1)

# asciinema

cd /var/ds/asciinema.user1.fs.al/

# https://github.com/asciinema/asciinema-server/releases

ds update # <release>

# clean up

docker image prune --force

docker system prune --force

nano /var/ds/_scripts/update.sh

chmod +x /var/ds/_scripts/update.sh

Important: In theory, it is possible to run the script above and update everything automatically, even from a cron job. Most of the time it would work perfectly. However, it is not guaranteed to work every time: whenever you update, there is a chance that something breaks.

For this reason, I usually run the update steps manually, one by one, checking that everything is OK after each update and taking the necessary steps to fix whatever gets broken. I use the script mainly as a reminder of all the steps, and sometimes I copy/paste commands from it.

It usually takes no more than a couple of hours, and I update the containers once every month or two.

-

The same script for the edu container looks like this:

/var/ds/_scripts/update.sh

#!/bin/bash -x

# update the system

apt update

apt upgrade --yes

#reboot

# get the latest version of scripts

cd /opt/docker-scripts/

./git.sh pull

# update ds

cd /opt/docker-scripts/ds/

make install

# get the latest version of the debian image

docker pull debian:13

docker pull debian:trixie

# run 'ds make' on these apps

for app in revproxy mariadb ; do

cd /var/ds/$app/

ds make

done

# run 'ds remake' on these apps

app_list="

vclab.user1.fs.al

mate1

raspi1

"

for app in $app_list ; do

cd /var/ds/$app/

ds remake

done

# moodle

cd /var/ds/edu.user1.fs.al/

ds update

ds remake

# clean up

docker system prune --force

2. Backup intro

2.1 Get more storage space

Let's get another volume on Hetzner. We can use it for backups etc.

Name it Storage, with size 50 GB, formatted with ext4, and mounted automatically.

From inside the VPS, we can change the mount point to /mnt/storage/:

lsblk

umount /dev/sdc

nano /etc/fstab

systemctl daemon-reload

mount -a

lsblk
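When editing /etc/fstab, the line for the volume only needs its mount point changed. As a rough sketch, the helper below prints what such a line might look like. The function name fstab_entry, the device path and the mount options are all assumptions for illustration; use the device that is already listed in your own /etc/fstab.

```shell
#!/bin/bash
# Hypothetical helper: print an /etc/fstab line for an ext4 volume.
# The mount options are an assumption, not taken from the tutorial.
fstab_entry() {
    local device=$1        # device path as it already appears in /etc/fstab
    local mount_point=$2   # new mount point, e.g. /mnt/storage
    printf '%s %s ext4 discard,nofail,defaults 0 0\n' "$device" "$mount_point"
}

# Example with a made-up volume id:
fstab_entry /dev/disk/by-id/scsi-0HC_Volume_12345678 /mnt/storage
```

The mount point directory has to exist (mkdir -p /mnt/storage) before mount -a can mount the volume there.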

2.2 Testing Borg

We are going to use BorgBackup, so let's try some tests in order to get familiar with it.

-

Prepare:

apt install borgbackup

mkdir tst

cd tst/

-

Define env variables BORG_PASSPHRASE and BORG_REPO. They will be used by the command borg.

export BORG_PASSPHRASE='12345678'

export BORG_REPO='/mnt/storage/borg/repo1'

mkdir -p $BORG_REPO

-

Initialize repo and export the key:

borg init -e repokey

borg key export > borg.test.repo1.key

cat borg.test.repo1.key

-

Create archives:

borg create ::archive1 /etc

borg create --stats ::archive2 /etc

tree /mnt/storage/borg/repo1

-

List archives:

borg list

borg list ::archive1

-

Extract:

borg extract ::archive1

ls

ls etc

rm -rf etc

-

Delete archives:

borg delete ::archive1

borg compact

borg list

-

Delete borg repository:

borg delete

borg list

ls /mnt/storage/

-

Clean up:

cd ..

rm -rf tst/

See also the Quick Start tutorial of BorgBackup.

3. Backup scripts

To back up all the installed docker-scripts apps, it is usually enough to back up the directories /opt/docker-scripts/ and /var/ds/, since the data of the apps are usually stored on the host system (in subdirectories of /var/ds/). It is also a good idea to include /root/ in the backup, since it may contain useful things (for example maintenance scripts).

We are going to use some bash scripts to automate the backup process. Let's place these scripts in the directory /root/backup/.

mkdir -p /root/backup/

cd /root/backup/

3.1 The main script

This is the main backup script, which is called to back up everything:

#!/bin/bash -x

cd $(dirname $0)

MIRROR_DIR=${1:-/mnt/storage/mirror}

# mirror everything

./mirror.sh $MIRROR_DIR

# backup the mirror directory

./borg.sh $MIRROR_DIR

# backup the incus setup

./incus-backup.sh

nano backup.sh

chmod +x backup.sh

It has these main steps:

- Mirror everything (/root/, /var/ds/ and /opt/docker-scripts/) to the directory /mnt/storage/mirror/. This is mainly done by rsync.

- Back up the mirror directory (/mnt/storage/mirror) using BorgBackup.

- Unrelated to the first two steps, also back up the setup and configuration of Incus, which is located at /var/lib/incus/. It is commented for the time being, but we will uncomment it later.

3.2 The mirror script

The script mirror.sh mirrors /root/, /var/ds/ and /opt/docker-scripts/ to the directory /mnt/storage/mirror/.

backup/mirror.sh

#!/bin/bash -x

MIRROR_DIR=${1:-/mnt/storage/mirror}

_rsync() {

local src=$1

local dst=$2

local sync='rsync -avrAX --delete --links --one-file-system'

[[ -f ${src}_scripts/exclude.rsync ]] \

&& sync+=" --exclude-from=${src}_scripts/exclude.rsync"

$sync $src $dst

}

main() {

mirror_host

mirror_incus_containers

mirror_snikket

}

mirror_host() {

local mirror=${MIRROR_DIR}/host

# mirror /root/

mkdir -p $mirror/root/

_rsync /root/ $mirror/root/

# mirror /opt/docker-scripts/

mkdir -p $mirror/opt/docker-scripts/

_rsync \

/opt/docker-scripts/ \

$mirror/opt/docker-scripts/

# backup the content of containers

/var/ds/_scripts/backup.sh

# mirror /var/ds/

stop_docker

mkdir -p $mirror/var/ds/

_rsync /var/ds/ $mirror/var/ds/

start_docker

}

stop_docker() {

local cmd="$* systemctl"

$cmd stop docker

$cmd disable docker

$cmd mask docker

}

start_docker() {

local cmd="$* systemctl"

$cmd unmask docker

$cmd enable docker

$cmd start docker

}

mirror_incus_containers() {

local mirror

local container_list="edu"

for container in $container_list ; do

mirror=$MIRROR_DIR/$container

# mount container

mount_root_of_container $container

# mirror /root/

mkdir -p $mirror/root/

_rsync mnt/root/ $mirror/root/

# mirror /opt/docker-scripts/

mkdir -p $mirror/opt/docker-scripts/

_rsync \

mnt/opt/docker-scripts/ \

$mirror/opt/docker-scripts/

# backup the content of the docker containers

incus exec $container -- /var/ds/_scripts/backup.sh

# mirror /var/ds/

stop_docker "incus exec $container --"

mkdir -p $mirror/var/ds/

_rsync mnt/var/ds/ $mirror/var/ds/

start_docker "incus exec $container --"

# unmount container

unmount_root_of_container

done

}

mount_root_of_container() {

local container=$1

mkdir -p mnt

incus file mount $container/. mnt/ &

MOUNT_PID=$!

sleep 2

}

unmount_root_of_container() {

kill -9 $MOUNT_PID

sleep 2

rmdir mnt

}

mirror_snikket() {

local container=snikket

local mirror=$MIRROR_DIR/$container

# mount container

mount_root_of_container $container

# mirror /root/

stop_docker "incus exec $container --"

mkdir -p $mirror/root/

_rsync mnt/root/ $mirror/root/

start_docker "incus exec $container --"

# unmount container

unmount_root_of_container

}

### call main

main "$@"

wget https://linux-cli.fs.al/apps/part6/backup/mirror.sh

chmod +x mirror.sh

nano mirror.sh

This script is mostly self-explanatory and easy to read. Nevertheless, let's discuss a few things about it.

-

The script does not only mirror the directories of the host, but also the directories /root/, /var/ds/ and /opt/docker-scripts/ in some Incus containers (assuming that we have installed Docker and some docker-scripts apps in them). In this example script, there is only the edu container:

local container_list="edu"

If there are more Incus containers, their names can be added to the list (separated by spaces).

-

Before mirroring /var/ds/, we run the script /var/ds/_scripts/backup.sh. This script may run the command ds backup on some of the applications. This is needed only for those apps where the content of the app directory is not sufficient for a successful restore of the application. Usually those are the apps that we update with ds remake instead of ds make. So, we make these docker-scripts backups before mirroring the directory and backing it up to the Storage. This is the backup script on the host:

/var/ds/_scripts/backup.sh

#!/bin/bash -x

rm /var/ds/*/logs/*.out

# openldap

cd /var/ds/ldap.user1.fs.al/

ds backup

find . -type f -name "backup*.tgz" -mtime +5 -delete

chmod +x /var/ds/_scripts/backup.sh

nano /var/ds/_scripts/backup.sh

Only for the OpenLDAP container do we need to make a backup before mirroring.

We use a similar backup script inside the container edu:

/var/ds/_scripts/backup.sh

#!/bin/bash -x

rm /var/ds/*/logs/*.out

# guacamole

cd /var/ds/vclab.user1.fs.al/

ds backup

find . -type f -name "backup*.tgz" -mtime +5 -delete

# linuxmint

cd /var/ds/mate1/

ds users backup

find backup/ -type f -name "*.tgz" -mtime +5 -delete

# raspberrypi

cd /var/ds/raspi1/

ds users backup

find backup/ -type f -name "*.tgz" -mtime +5 -delete

# moodle

cd /var/ds/edu.user1.fs.al/

ds backup

find . -type f -name "backup*.tgz" -mtime +5 -delete

chmod +x /var/ds/_scripts/backup.sh

nano /var/ds/_scripts/backup.sh

-

Before mirroring /var/ds/, which contains the applications and their data, we make sure to stop Docker, which in turn stops all the applications. If the data on the disk keep changing while we make the mirror, we may get a "mirror" with inconsistent data.

-

For the Incus containers, we mount the filesystem of the container to a directory on the host, before mirroring, and unmount it afterwards.

-

For the Incus container snikket, we mirror only the /root/ directory (which also contains /root/snikket/ and all the data of the application).
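The "stop Docker, mirror, start Docker" pattern discussed above can also be written as a small wrapper that always restarts Docker, even when the mirroring command fails. This is a simplified sketch, not the code of mirror.sh: the function name with_docker_stopped is hypothetical, and unlike stop_docker/start_docker it does not disable or mask the service.

```shell
#!/bin/bash
# Hypothetical wrapper: run a command while Docker is stopped, then
# start Docker again and pass on the exit code of the command.
with_docker_stopped() {
    systemctl stop docker || return 1
    "$@"                      # e.g. the rsync call that mirrors /var/ds/
    local rc=$?
    systemctl start docker    # runs whether or not the command succeeded
    return $rc
}

# Usage (paths as in mirror.sh):
#   with_docker_stopped rsync -aAX --delete /var/ds/ /mnt/storage/mirror/host/var/ds/
```

Keeping the restart in one place reduces the chance that a failed rsync leaves all the applications down until someone notices.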

The Incus command incus file mount depends on sshfs, so we should make sure that it is installed:

apt install sshfs

Let's try it now:

./mirror.sh

tree /mnt/storage/mirror -d -L 2

tree /mnt/storage/mirror -d -L 3

tree /mnt/storage/mirror -d -L 4 | less

du -hs /mnt/storage/mirror

du -hs /mnt/storage/mirror/*

3.3 The borg script

This script makes a backup of the mirror directory to the Borg repository.

backup/borg.sh

#!/bin/bash

export BORG_PASSPHRASE="quoa9bohCeer1ad8bait"

export BORG_REPO='/mnt/storage/borg/mycloud'

### to initialize the repo run this command (only once)

#borg init -e repokey

#borg key export

dir_to_backup=${1:-/mnt/storage/mirror}

# some helpers and error handling:

info() { printf "\n%s %s\n\n" "$( date )" "$*" >&2; }

trap 'echo $( date ) Backup interrupted >&2; exit 2' INT TERM

info "Starting backup"

borg create \

--stats \

--show-rc \

::'{hostname}-{now}' \

$dir_to_backup

backup_exit=$?

info "Pruning repository"

borg prune \

--list \

--glob-archives '{hostname}-*' \

--show-rc \

--keep-daily 7 \

--keep-weekly 4 \

--keep-monthly 6

prune_exit=$?

info "Compacting repository"

borg compact

compact_exit=$?

# use highest exit code as global exit code

global_exit=$(( backup_exit > prune_exit ? backup_exit : prune_exit ))

global_exit=$(( compact_exit > global_exit ? compact_exit : global_exit ))

if [ ${global_exit} -eq 0 ]; then

info "Backup, Prune, and Compact finished successfully"

elif [ ${global_exit} -eq 1 ]; then

info "Backup, Prune, and/or Compact finished with warnings"

else

info "Backup, Prune, and/or Compact finished with errors"

fi

exit ${global_exit}

wget https://linux-cli.fs.al/apps/part6/backup/borg.sh

chmod +x borg.sh

nano borg.sh

The main commands of this script are borg create, borg prune and borg compact. The last part of the script just reports the status of these commands.

The script keeps backups only for the last 7 days, the last 4 weeks, and the last 6 months (up to 17 backups in total). This prevents the size of the backups from growing without limit. Borg also uses deduplication, compression and compaction to keep the backup storage as small as possible.
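Before trusting a retention policy, it can be useful to preview what it would remove. The sketch below wraps borg prune with its --dry-run option, using the same retention settings as borg.sh. The function name preview_prune is hypothetical, and it assumes that BORG_REPO and BORG_PASSPHRASE are already exported.

```shell
#!/bin/bash
# Hypothetical helper: show which archives the retention policy would
# keep or prune, without deleting anything.
preview_prune() {
    borg prune \
        --dry-run \
        --list \
        --glob-archives '{hostname}-*' \
        --keep-daily 7 \
        --keep-weekly 4 \
        --keep-monthly 6
}

# Usage:
#   export BORG_REPO='/mnt/storage/borg/mycloud'
#   preview_prune
```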

Borg commands usually need to know on which borg repository they should work, and the passphrase that is used to encrypt that repository. These may be specified on the command line, or with environment variables. We are using environment variables because this is more convenient for a script:

export BORG_PASSPHRASE='XXXXXXXXXXXXXXXX'

export BORG_REPO='/mnt/storage/borg/mycloud'

-

Before using the borg script, it is necessary to initialize a borg repository for the backup. It can be done like this:

pwgen 20

export BORG_PASSPHRASE='XXXXXXXXXXXXXXXXXXXX'

export BORG_REPO='/mnt/storage/borg/mycloud'

mkdir -p $BORG_REPO

borg init -e repokey

-

Save the passphrase and the key.

borg key export > borg.mycloud.key

Important: The repository data is totally inaccessible without the key and the passphrase. So, make sure to save them in a safe place, outside the server and outside the Storage.

-

Copy the passphrase that was used to initialize the repo to the script borg.sh, and test it:

nano borg.sh

./borg.sh

ls /mnt/storage/borg/

du -hs /mnt/storage/borg/mycloud/

borg list

borg list ::mycloud-2025-04-27T15:04:54 | less

3.4 Run them periodically

We want to run the script backup.sh periodically, but first let's test it, to make sure that it runs smoothly:

./backup.sh

borg list

du -hs /mnt/storage/*

df -h /mnt/storage/

To run it periodically, let's create a cron job like this:

cat <<'EOF' > /etc/cron.d/backup

30 3 * * * root bash -l -c "/root/backup/backup.sh &> /dev/null"

EOF

It runs the script every night at 3:30. The borg script takes care to keep only the latest 7 daily backups, the latest 4 weekly backups, and the latest 6 monthly backups. This makes sure that the size of the backups (on the Storage) does not grow without limits. Borg also uses deduplication and compression for storing the backups, and this helps to reduce further the size of the backup data.

We are using bash -l -c to run the command in a login shell. This is because the default PATH variable in the cron environment is limited, and some commands inside the script would fail to execute because they cannot be found. By running it with bash -l -c we make sure that it has the same environment variables as when we execute it from the prompt.

We are also using &> /dev/null in order to discard all the stdout and stderr output. If a cron job produces some output, cron will try to notify us by email. Usually we would like to avoid this.
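Discarding all output also hides failures. As an alternative, the output can be appended to a log file. The helper below is a minimal sketch of this idea; the function name run_logged and the log path in the usage note are assumptions, not part of the backup scripts.

```shell
#!/bin/bash
# Hypothetical helper: run a command, append its stdout and stderr to a
# log file together with timestamps, and return the command's exit code.
run_logged() {
    local log_file=$1
    shift
    {
        echo "=== $(date '+%F %T') start: $*"
        "$@"
        local rc=$?
        echo "=== $(date '+%F %T') exit code: $rc"
        return $rc
    } >> "$log_file" 2>&1
}

# Usage:
#   run_logged /var/log/backup.log /root/backup/backup.sh
```

In the cron job itself, the same effect can be obtained by replacing &> /dev/null with >> /var/log/backup.log 2>&1; cron stays silent either way, because nothing is written to its stdout or stderr.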

3.5 Restore an app

Let's assume that we want to restore the application /var/ds/wg1/.

-

First, we extract it from the Borg archive to a local directory:

cd ~

nano backup/borg.sh

export BORG_PASSPHRASE='quoa9bohCeer1ad8bait'

export BORG_REPO='/mnt/storage/borg/mycloud'

borg list

borg list ::mycloud-2025-04-27T15:04:54 | less

borg list \

::mycloud-2025-04-27T15:04:54 \

mnt/storage/mirror/host/var/ds/wg1/

borg extract \

::mycloud-2025-04-27T15:04:54 \

mnt/storage/mirror/host/var/ds/wg1/

ls mnt/storage/mirror/host/var/ds/wg1/

-

Remove the current app (if installed):

cd /var/ds/wg1/

ds remove

cd ..

rm -rf wg1/

-

Copy/move the directory that was extracted from the backup archive:

cd /var/ds/

mv ~/mnt/storage/mirror/host/var/ds/wg1/ .

rm -rf ~/mnt/storage/

-

Rebuild the app:

cd wg1/

ds make

Usually this is sufficient for most of the apps, because the necessary settings, data, configurations etc. are already in the directory of the application.

In some cases, if there is a .tgz backup file, we may also need to do a ds restore:

ds restore backup*.tgz
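In the steps above the archive name is copied by hand from the output of borg list. As a sketch, the lookup of the most recent archive can be scripted as below. The function names are hypothetical, BORG_REPO and BORG_PASSPHRASE are assumed to be exported already, and the code relies on borg list printing archives from oldest to newest.

```shell
#!/bin/bash
# Hypothetical helpers for restoring from the most recent archive.

# Print the name of the newest archive in the repository.
latest_archive() {
    borg list --short | tail -n 1
}

# Extract one path (given without the leading slash) from the newest
# archive into the current directory.
extract_from_latest() {
    local path=$1
    local archive
    archive=$(latest_archive)
    if [[ -z $archive ]]; then
        echo "no archives found" >&2
        return 1
    fi
    borg extract "::$archive" "$path"
}

# Usage:
#   cd ~
#   extract_from_latest mnt/storage/mirror/host/var/ds/wg1/
```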

4. Maintain Incus

4.1 Backup

This is done by the script incus-backup.sh:

backup/incus-backup.sh

#!/bin/bash -x

main() {

backup_incus_config

snapshot_containers

#export_containers

}

backup_incus_config() {

local incus_dir="/var/lib/incus"

local destination="/mnt/storage/backup/incus"

mkdir -p $destination

incus admin init --dump > $incus_dir/incus.config

incus --version > $incus_dir/incus.version

incus list > $incus_dir/incus.instances.list

rsync \

-arAX --delete --links --one-file-system --stats -h \

--exclude disks \

$incus_dir/ \

$destination

}

snapshot_containers() {

# get the day of the week, like 'Monday'

local day=$(date +%A)

# get a list of all the container names

local containers=$(incus list -cn -f csv)

# make a snapshot for each container

for container in $containers; do

incus snapshot create $container $day --reuse

done

}

export_containers(){

local dir='/mnt/storage/incus-export'

mkdir -p $dir

# clean up old export files

find $dir/ -type f -name "*.tar.xz" -mtime +5 -delete

# get list of containers to be exported

# local container_list=$(incus list -cn -f csv)

local container_list="name1 name2 name3"

# export containers

for container in $container_list; do

incus export $container \

$dir/$container-$(date +'%Y-%m-%d').tar.xz \

--optimized-storage

done

}

# call main

main "$@"

wget https://linux-cli.fs.al/apps/part6/backup/incus-backup.sh

chmod +x incus-backup.sh

nano incus-backup.sh

The Incus directory is located at /var/lib/incus/. When we mirror this directory to the Storage (with rsync), we exclude the subdirectory disks/, which contains the storage devices where the Incus containers etc. are stored. Instead, we back up the Incus containers separately.

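We also make a daily snapshot of all the containers. Each snapshot is named after the day of the week and created with the option --reuse, so it replaces the snapshot with the same name from the previous week. In this way only the last 7 daily snapshots are preserved.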
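The rotation comes from the snapshot names themselves: date +%A can only produce seven different values, and --reuse overwrites a snapshot that has the same name. The following sketch (the function name snapshot_names and the start date are arbitrary choices for illustration; GNU date is assumed) prints the names that would be used on consecutive days and counts the distinct ones.

```shell
#!/bin/bash
# Print the snapshot name (weekday) for each of N consecutive days.
snapshot_names() {
    local days=$1
    local base=1735689600    # 2025-01-01 00:00 UTC, an arbitrary start day
    local i
    for (( i = 0; i < days; i++ )); do
        LC_ALL=C date -u -d "@$(( base + i * 86400 ))" +%A
    done
}

# Over 10 days, the names repeat after a week, so there are 7 distinct ones.
snapshot_names 10 | sort -u | wc -l
```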

The function export_containers uses the command incus export (with the option --optimized-storage) to create a compressed archive of the filesystem of the container. Archives older than about 5 days are removed. Not all the containers need a full backup like this, because for some of them we back up only the important configurations and data. (In our case, none of the containers need a full backup like this, which is why the function is commented out.)

4.2 Restore

A restore can be done manually, with commands like these:

-

Install the same version of Incus as that of the backup:

dir='/mnt/storage/backup/incus'

ls $dir/incus.*

cat $dir/incus.version

apt install --yes incus

-

Initialize incus with the same config settings as the backup:

nano $dir/incus.config

cat $dir/incus.config | incus admin init --preseed

incus list

-

Import any instances from backup:

dir='/mnt/storage/incus-export'

ls $dir/*.tar.xz

incus import $dir/name1-2025-04-28.tar.xz

incus list

incus start name1

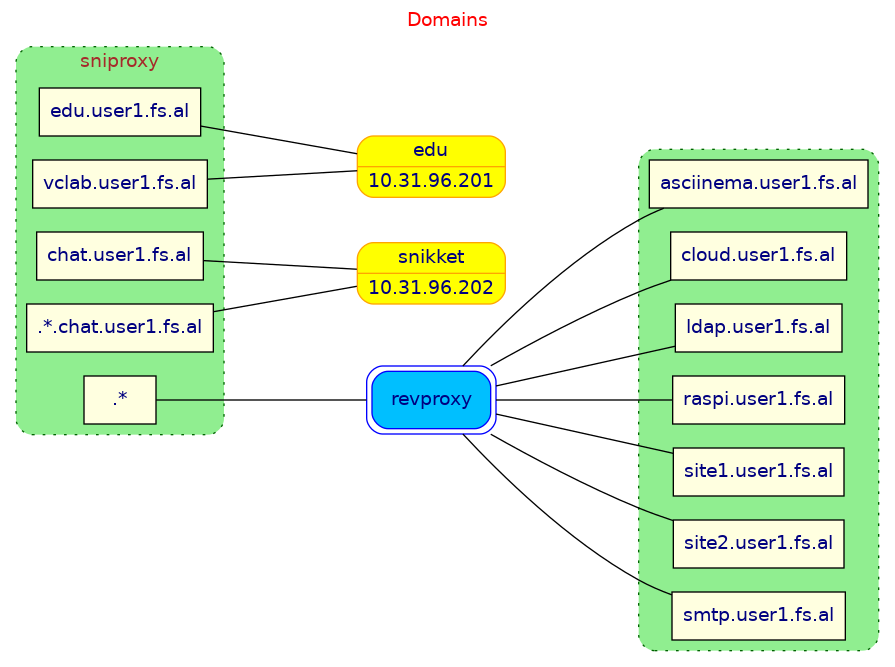

5. Visualize

We can build some GraphViz diagrams that might help us visualize what we have done on the server. It can be done with bash scripts.

First, let's install GraphViz:

apt install graphviz

5.1 Port forwarding

This script builds a graph that shows port forwarding on the server. Usually the open ports:

- go to the host (the ports on the public zone of firewalld)

- are forwarded to an Incus container

- are forwarded to a Docker container

visualize/gv-ports.sh

#!/bin/bash

main() {

local file_gv=${1:-/dev/stdout}

# get container name from ip

declare -A container_name

for container in $(get_containers); do

ip=$(get_ip $container)

container_name[$ip]=$container

done

print_gv_file $file_gv

}

get_containers() {

incus ls -f csv -c ns \

| grep RUNNING \

| sed 's/,RUNNING//'

}

get_ip() {

local container=$1

if [[ -z $container ]]; then

ip route get 8.8.8.8 | head -1 | sed 's/.*src //' | cut -d' ' -f1

else

incus exec $container -- \

ip route get 8.8.8.8 | head -1 | sed 's/.*src //' | cut -d' ' -f1

fi

}

print_gv_file() {

local file_gv=${1:-/dev/stdout}

cat <<EOF > $file_gv

digraph {

graph [

rankdir = LR

ranksep = 1.5

concentrate = true

fontname = system

fontcolor = brown

]

// all the ports that are accessible in the server

subgraph ports {

graph [

cluster = true

//label = "Ports"

style = "rounded, dotted"

]

node [

fillcolor = lightyellow

style = "filled"

fontname = system

fontcolor = navy

]

// ports that are forwarded to the host itself

// actually they are the ports that are open in the firewall

subgraph host_ports {

graph [

cluster = true

style = "rounded"

color = darkgreen

bgcolor = lightgreen

label = "firewall-cmd --list-ports"

]

$(host_port_nodes)

}

// ports that are forwarded to the docker containers

subgraph docker_ports {

graph [

cluster = true

label = "docker ports"

style = "rounded"

bgcolor = lightblue

color = steelblue

]

$(docker_port_nodes)

}

// ports that are forwarded to the incus containers

// with the command: 'incus network forward ...'

subgraph incus_ports {

graph [

cluster = true

label = "incus network forward"

style = "rounded"

bgcolor = pink

color = orangered

]

$(incus_port_nodes)

}

}

Host [

label = "Host: $(hostname -f)"

margin = 0.2

shape = box

peripheries = 2

style = "filled, rounded"

color = red

fillcolor = orange

fontname = system

fontcolor = navy

]

// docker containers

subgraph docker_containers {

graph [

cluster = true

label = "Docker"

style = "rounded"

]

node [

shape = record

style = "filled, rounded"

color = blue

fillcolor = deepskyblue

fontname = system

fontcolor = navy

]

$(docker_container_nodes)

}

// incus containers

subgraph incus_containers {

graph [

cluster = true

label = "Incus"

style = "rounded"

]

node [

shape = record

style = "filled, rounded"

color = orange

fillcolor = yellow

fontname = system

fontcolor = navy

]

$(incus_container_nodes)

}

edge [

dir = back

arrowtail = odiamondinvempty

]

$(host_edges)

$(docker_edges)

$(incus_edges)

graph [

labelloc = t

//labeljust = r

fontcolor = red

label = "Port forwarding"

]

}

EOF

}

print_port_node() {

local protocol=$1

local port=$2

cat <<EOF

"$protocol/$port" [ label = <$protocol <br/> <b>$port</b>> ];

EOF

}

host_port_nodes() {

echo

local ports=$(firewall-cmd --list-ports --zone=public)

for p in $ports; do

protocol=$(echo $p | cut -d'/' -f2)

port=$(echo $p | cut -d'/' -f1)

print_port_node $protocol $port

done

}

host_edges() {

echo

local ports=$(firewall-cmd --list-ports --zone=public)

for p in $ports; do

protocol=$(echo $p | cut -d'/' -f2)

port=$(echo $p | cut -d'/' -f1)

cat <<EOF

"$protocol/$port" -> Host;

EOF

done

}

docker_port_nodes() {

echo

docker container ls -a --format "{{.Names}} {{.Ports}}" \

| grep 0.0.0.0 \

| sed -e 's/,//g' \

| tr ' ' "\n" \

| grep 0.0.0.0 \

| cut -d: -f2 \

| \

while read line; do

port=$(echo $line | cut -d'-' -f1)

protocol=$(echo $line | cut -d'/' -f2)

print_port_node $protocol $port

done

}

docker_container_nodes() {

echo

docker container ls -a --format "{{.Names}} {{.Ports}}" \

| grep 0.0.0.0 \

| cut -d' ' -f1 \

| \

while read name; do

echo " \"$name\";"

done

}

docker_edges() {

echo

docker container ls -a --format "{{.Names}} {{.Ports}}" \

| grep 0.0.0.0 \

| sed -e 's/,//g' \

| \

while read line; do

container=$(echo $line | cut -d' ' -f1)

echo $line | tr ' ' "\n" | grep 0.0.0.0 | cut -d: -f2 | \

while read line1; do

port=$(echo $line1 | cut -d'-' -f1)

protocol=$(echo $line1 | cut -d'/' -f2)

target=$(echo $line1 | cut -d'>' -f2 | cut -d'/' -f1)

[[ $target == $port ]] && target=''

cat <<EOF

"$protocol/$port" -> "$container" [ label = "$target" ];

EOF

done

done

}

incus_port_nodes() {

echo

get_forward_lines | \

while read line; do

eval "$line"

target_port=${target_port:-$listen_port}

#echo $description $protocol $listen_port $target_port $target_address

print_port_node $protocol $listen_port

done

}

get_forward_lines() {

local listen_address=$(get_ip)

incus network forward show incusbr0 $listen_address \

| sed -e '/^description:/d' \

-e '/^config:/d' \

-e '/^ports:/d' \

-e '/^listen_address:/d' \

-e '/^location:/d' \

| tr -d '\n\r' \

| sed -e 's/- description:/\ndescription:/g' \

| sed -e 1d \

| sed -e 's/: /=/g'

}

incus_container_nodes() {

echo

for container in $(get_containers); do

ip=$(get_ip $container)

cat <<EOF

$container [ label = "$container|<ip> $ip" ];

EOF

done

}

incus_edges() {

echo

get_forward_lines | \

while read line; do

eval "$line"

#echo $description $protocol $listen_port $target_port $target_address

container=${container_name[$target_address]}

cat <<EOF

"$protocol/$listen_port" -> $container:ip [ label = "$target_port" ];

EOF

done

}

### call main

main "$@"

wget https://linux-cli.fs.al/apps/part6/visualize/gv-ports.sh

chmod +x gv-ports.sh

./gv-ports.sh ports.gv

dot -Tpng ports.gv -o ports.png

It should look like this:

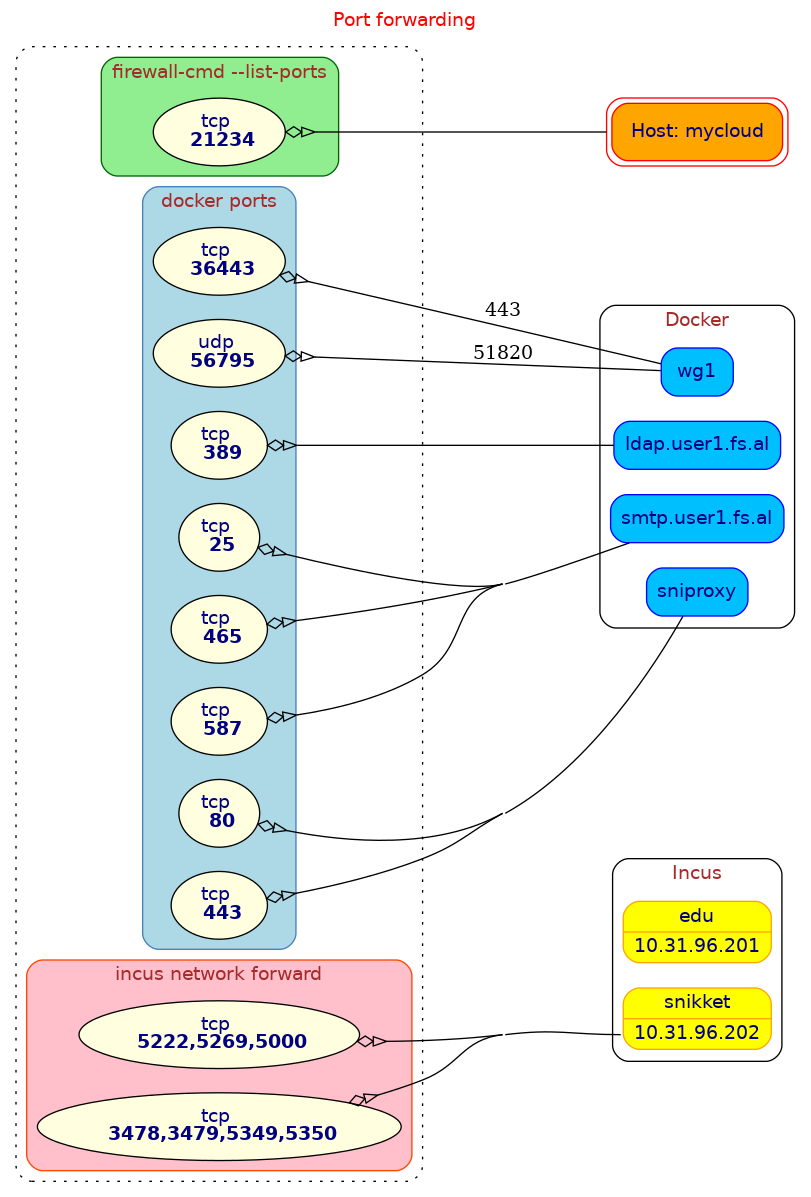
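To see how a port entry becomes a node of the graph, here is a stripped-down sketch of what host_port_nodes and print_port_node do for entries like those listed by firewall-cmd --list-ports. The function name port_node and the sample ports are made up for illustration, and it uses bash parameter expansion instead of cut.

```shell
#!/bin/bash
# Turn an entry like "443/tcp" into a GraphViz node definition.
port_node() {
    local entry=$1
    local port=${entry%%/*}        # part before the slash
    local protocol=${entry##*/}    # part after the slash
    printf '"%s/%s" [ label = <%s <br/> <b>%s</b>> ];\n' \
        "$protocol" "$port" "$protocol" "$port"
}

# Sample entries (assumed, not read from firewalld):
for p in 80/tcp 443/tcp 51820/udp; do
    port_node "$p"
done
```

The node id combines protocol and port ("tcp/443"), so the same port number on tcp and udp produces two separate nodes.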

5.2 Web domains

The web domains hosted on the server are handled by sniproxy and revproxy. The first one, sniproxy, redirects some of the domains to Incus containers, and the rest to revproxy (which is based on nginx). Then, revproxy redirects these domains to Docker containers.

visualize/gv-domains.sh

#!/bin/bash

main() {

local file_gv=${1:-/dev/stdout}

# get container name from ip

declare -A container_name

for container in $(get_containers); do

ip=$(get_ip $container)

container_name[$ip]=$container

done

print_gv_file $file_gv

}

get_containers() {

incus ls -f csv -c ns \

| grep RUNNING \

| sed 's/,RUNNING//'

}

get_ip() {

local container=$1

if [[ -z $container ]]; then

ip route get 8.8.8.8 | head -1 | sed 's/.*src //' | cut -d' ' -f1

else

incus exec $container -- \

ip route get 8.8.8.8 | head -1 | sed 's/.*src //' | cut -d' ' -f1

fi

}

print_gv_file() {

local file_gv=${1:-/dev/stdout}

cat <<EOF > $file_gv

digraph {

graph [

rankdir = LR

ranksep = 1.5

concentrate = true

fontname = system

fontcolor = brown

]

node [

shape = box

]

// domain entries of sniproxy

subgraph sniproxy_domains {

graph [

cluster = true

label = sniproxy

color = darkgreen

bgcolor = lightgreen

style = "rounded, dotted"

]

node [

fillcolor = lightyellow

style = "filled"

fontname = system

fontcolor = navy

]

$(sniproxy_domains)

}

// domain entries of revproxy

subgraph revproxy_domains {

graph [

cluster = true

color = darkgreen

bgcolor = lightgreen

style = "rounded, dotted"

]

node [

fillcolor = lightyellow

style = "filled"

fontname = system

fontcolor = navy

]

$(revproxy_domains)

}

revproxy [

label = "revproxy"

margin = 0.2

shape = box

peripheries = 2

style = "filled, rounded"

color = blue

fillcolor = deepskyblue

fontname = system

fontcolor = navy

]

node [

shape = record

style = "filled, rounded"

color = orange

fillcolor = yellow

fontname = system

fontcolor = navy

]

$(incus_container_nodes)

edge [

arrowhead = none

]

$(sniproxy_edges)

$(revproxy_edges)

graph [

labelloc = t

//labeljust = r

fontcolor = red

label = "Domains"

]

}

EOF

}

sniproxy_domains() {

echo

cat /var/ds/sniproxy/etc/sniproxy.conf \

| sed -e '1,/table/d' \

| sed -e '/}/,$d' -e '/# /d' -e '/^ *$/d' \

| \

while read line; do

domain=$(echo "$line" | cut -d' ' -f1)

echo " \"$domain\";"

done

}

revproxy_domains() {

echo

for domain in $(ds @revproxy domains-ls); do

echo " \"$domain\";"

done

}

incus_container_nodes() {

echo

for container in $(get_containers); do

ip=$(get_ip $container)

cat <<EOF

$container [ label = "$container|<ip> $ip" ];

EOF

done

}

sniproxy_edges() {

echo

cat /var/ds/sniproxy/etc/sniproxy.conf \

| sed -e '1,/table/d' \

| sed -e '/}/,$d' -e '/# /d' -e '/^ *$/d' \

| \

while read line; do

domain=$(echo "$line" | xargs | cut -d' ' -f1)

target=$(echo "$line" | xargs | cut -d' ' -f2)

container=${container_name[$target]}

[[ -z $container ]] && container=$target

echo " \"$domain\" -> \"$container\";"

done

}

revproxy_edges() {

echo

for domain in $(ds @revproxy domains-ls); do

echo " revproxy -> \"$domain\";"

done

}

### call main

main "$@"

wget https://linux-cli.fs.al/apps/part6/visualize/gv-domains.sh

chmod +x gv-domains.sh

./gv-domains.sh domains.gv

dot -Tpng domains.gv -o domains.png
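The part of the script that reads the domains from sniproxy.conf can be tried in isolation. The sketch below applies the same sed filters as sniproxy_domains and sniproxy_edges to a file given as argument. The function name sniproxy_table is hypothetical, and it assumes a config where the domain entries sit inside a single table { ... } block, one "domain target" pair per line.

```shell
#!/bin/bash
# Print "domain -> target" for each entry in the table block of a
# sniproxy config file (same sed filters as in gv-domains.sh).
sniproxy_table() {
    local conf=$1
    sed -e '1,/table/d' "$conf" \
        | sed -e '/}/,$d' -e '/# /d' -e '/^ *$/d' \
        | while read -r domain target; do
              printf '%s -> %s\n' "$domain" "$target"
          done
}

# Usage:
#   sniproxy_table /var/ds/sniproxy/etc/sniproxy.conf
```

The first sed drops everything up to and including the line that opens the table, and the second one drops the closing brace and what follows it, as well as comments and empty lines.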

It should look like this: